Deploying distributed applications and services within Azure can be cumbersome, at least when you are not familiar with the different options and features available. Therefore I wanted to use this post to explain the different options we have for load balancing within Azure, both for internal services and external-facing services.

Now there are three different load balancing features available directly in Azure:

- Azure Load Balancer

- Application Gateway

- Traffic Manager

There are also third-party solutions from different vendors available in the Marketplace, which have a lot more features. In most cases, however, these require additional licenses and compute resources, but I'll come back to those later in the blog post.

Now there are some distinct differences between the load balancing features in Azure and the third-party vendors.

| Service | Azure Load Balancer | Application Gateway | Traffic Manager | Third party (NetScaler, etc.) |
|---|---|---|---|---|
| Layer | Layer 4 | Layer 7 | DNS | Layer 4 to Layer 7, DNS, etc. |
| Protocol support | TCP, UDP (any application based on these protocols) | HTTP, HTTPS | All services that use DNS | Most protocols |
| Endpoints | Azure VMs and Cloud Services instances | Azure VMs and Cloud Services instances | Azure endpoints, on-premises endpoints | Azure VM endpoints, on-premises, internal instances |
| Internal & external support | Internal and external communication | Internal and external communication | External only | Internal and external traffic |
| Health monitoring | Probes: TCP or HTTP | Probes: HTTP or HTTPS | Probes: HTTP or HTTPS | Custom, TCP, HTTP, HTTPS, inline HTTP, PING, UDP, etc. |
| Pricing | Free | $0.07 per gateway-hour per instance (Medium, the default size) | $0.54 per million queries (cheaper above 1 billion queries) | Depends on the vendor: regular compute instance + license from the vendor |
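To put the pricing row into perspective, here is a rough monthly cost estimate in Python. It is a sketch under stated assumptions: a 730-hour month, the default of two Medium instances for Application Gateway, and only the first Traffic Manager price tier (the cheaper rate above 1 billion queries is not modelled). The constant and function names are made up for illustration.

```python
# Rough monthly cost estimate based on the list prices quoted in the table.
# Assumptions (not from the post): a 730-hour month, and only the first
# Traffic Manager price tier; the cheaper rate above 1 billion queries is ignored.

HOURS_PER_MONTH = 730                    # assumption: average hours in a month
APPGW_MEDIUM_PER_INSTANCE_HOUR = 0.07    # USD, Medium gateway, from the table
TM_PER_MILLION_QUERIES = 0.54            # USD, from the table


def app_gateway_monthly_cost(instances: int = 2, hours: float = HOURS_PER_MONTH) -> float:
    """Application Gateway is billed per gateway-hour *per instance*."""
    return round(APPGW_MEDIUM_PER_INSTANCE_HOUR * instances * hours, 2)


def traffic_manager_monthly_cost(queries: int) -> float:
    """Traffic Manager is billed per million DNS queries (first tier only)."""
    if queries > 1_000_000_000:
        raise ValueError("the cheaper tier above 1 billion queries is not modelled here")
    return round(TM_PER_MILLION_QUERIES * queries / 1_000_000, 2)


if __name__ == "__main__":
    print(app_gateway_monthly_cost())                 # default: two Medium instances
    print(traffic_manager_monthly_cost(50_000_000))   # 50 million queries
```

With these assumptions, two Medium gateway instances come to about $102.20 per month, and 50 million Traffic Manager queries to about $27, while Azure Load Balancer itself adds nothing.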

So depending on our requirements, it is also possible to combine the different services, and in some cases a combination might also be more cost-effective. For instance, we could have Traffic Manager do geo-based load balancing between different regions, and a NetScaler HA pair on each site to deliver local load balancing in each region.

Azure Load Balancing

NOTE: Azure Load Balancing requires that the virtual machines we want to load balance are placed in an availability set. If you have existing virtual machines created using ARM that you want to add to an availability set, you cannot do this yet.

As mentioned, the load balancer can be created for either external or internal purposes. For this example I'm going to use an external load balancer in front of a couple of web servers in an ARM deployment.

We have two IIS web servers, deployed in the same availability set and the same virtual network. They are placed in different network security groups, which allows us to handle ACLs per vNIC.

To create an Azure Load Balancer, we can simply create a new Load Balancer resource from within the portal.

First we need to specify a probe, which is used to check the health of the backend pool. Here we specify which protocol to use for the health check, the port, and (for HTTP) the path to check against the backend pool.

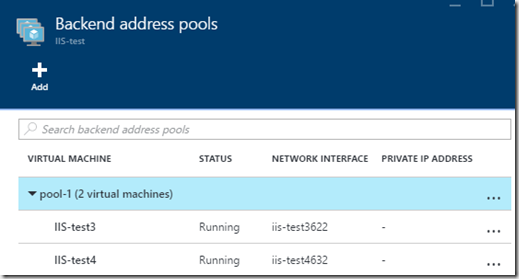
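The probe logic can be illustrated with a small Python simulation. It is a sketch, not Azure's implementation: an HTTP probe is treated as successful only on a 200 OK response, while the unhealthy threshold of two consecutive failures and the back-in-rotation-after-one-success rule are assumed values for illustration. `BackendHealth` and `http_probe_succeeded` are hypothetical names, and no network calls are made.

```python
from dataclasses import dataclass

# Simulated health probe evaluation; no real HTTP requests are sent.
# Assumptions: a backend leaves rotation after `unhealthy_threshold`
# consecutive failed probes and returns after one successful probe.


def http_probe_succeeded(status_code: int) -> bool:
    """An HTTP probe counts as healthy only on a 200 OK response."""
    return status_code == 200


@dataclass
class BackendHealth:
    name: str
    unhealthy_threshold: int = 2   # assumed value for this sketch
    consecutive_failures: int = 0
    in_rotation: bool = True

    def record(self, probe_ok: bool) -> bool:
        """Update state with one probe result; return whether the VM gets traffic."""
        if probe_ok:
            self.consecutive_failures = 0
            self.in_rotation = True
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.unhealthy_threshold:
                self.in_rotation = False
        return self.in_rotation


if __name__ == "__main__":
    web1 = BackendHealth("web1")
    for status in (200, 500, 503, 200):
        print(status, web1.record(http_probe_succeeded(status)))
```

A single failed probe does not remove the server here; only repeated failures do, which avoids flapping on one slow response.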

When setting up the Azure Load Balancer we also have to specify a backend pool, which consists of the virtual machines we want to load balance.

Then we need to create the load balancing rules, which tie all the different settings together. Here we specify the backend pool, the probe and the backend port. (For reverse-proxy-style setups we can, for instance, expose a service externally on port 8080 while the servers listen on port 80 internally.)
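How a rule ties these settings together can be sketched as a small data model. This is a hypothetical illustration, not the Azure API: `LoadBalancingRule` and `candidate_targets` are made-up names, and the pool addresses are examples. It shows the port translation from a public frontend port (8080) to the port the servers listen on (80).

```python
from dataclasses import dataclass

# Hypothetical model of a load balancing rule: frontend port -> backend pool,
# backend port and health probe. Names and addresses are illustrative only.


@dataclass(frozen=True)
class LoadBalancingRule:
    name: str
    protocol: str                  # "Tcp" or "Udp"
    frontend_port: int             # port exposed on the public VIP
    backend_port: int              # port the servers actually listen on
    backend_pool: tuple[str, ...]  # private IPs of the load balanced VMs
    probe: str                     # name of the probe guarding the pool


def candidate_targets(rule: LoadBalancingRule, protocol: str, port: int) -> list[tuple[str, int]]:
    """Return the (ip, port) pairs a matching incoming flow may be sent to."""
    if protocol != rule.protocol or port != rule.frontend_port:
        return []  # the flow does not match this rule
    return [(ip, rule.backend_port) for ip in rule.backend_pool]


if __name__ == "__main__":
    web = LoadBalancingRule("web", "Tcp", 8080, 80, ("10.0.0.5", "10.0.0.6"), "http-probe")
    print(candidate_targets(web, "Tcp", 8080))
```

Which of the candidates actually receives a given connection is decided by the distribution mode and the probe status, not by the rule itself.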

We also define the session persistence here. NOTE: We cannot choose the load balancing method here, for instance based on load or number of connections. What we can change using PowerShell is the distribution mode, which controls which parts of the connection are hashed (the default 5-tuple, or source IP affinity); it does not add load- or connection-based methods –> https://azure.microsoft.com/nb-no/documentation/articles/load-balancer-distribution-mode/
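The distribution modes can be sketched as hash-based selection over different tuples of the connection. By default Azure Load Balancer hashes the 5-tuple (source IP, source port, destination IP, destination port, protocol), while source IP affinity uses a 2-tuple or 3-tuple. In this sketch the mode names mirror the ARM `LoadDistribution` values as I understand them, and SHA-256 is an assumed stand-in for Azure's internal hash function; `pick_backend` is a hypothetical name.

```python
import hashlib

# Sketch of hash-based distribution. Assumption: SHA-256 stands in for
# Azure's internal (unpublished) hash; only the tuple choice matters here.
MODES = {
    "Default": ("src_ip", "src_port", "dst_ip", "dst_port", "protocol"),  # 5-tuple
    "SourceIP": ("src_ip", "dst_ip"),                                     # 2-tuple
    "SourceIPProtocol": ("src_ip", "dst_ip", "protocol"),                 # 3-tuple
}


def pick_backend(flow: dict, pool: list[str], mode: str = "Default") -> str:
    """Map a connection to one backend by hashing the fields used by `mode`."""
    key = "|".join(str(flow[name]) for name in MODES[mode])
    digest = hashlib.sha256(key.encode()).digest()
    return pool[int.from_bytes(digest[:8], "big") % len(pool)]


if __name__ == "__main__":
    pool = ["10.0.0.5", "10.0.0.6"]
    for src_port in (50001, 50002, 50003):
        flow = {"src_ip": "192.0.2.7", "src_port": src_port,
                "dst_ip": "203.0.113.10", "dst_port": 80, "protocol": "Tcp"}
        print(src_port, pick_backend(flow, pool), pick_backend(flow, pool, "SourceIP"))
```

With `Default`, a new connection from a new source port may land on a different server; with `SourceIP`, every connection from the same client IP goes to the same server as long as the pool is unchanged, which is what session persistence relies on.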

SSL offloading is also not supported by Azure Load Balancing, and it is missing important features like content switching and rewrite policies.

Application Gateway

Application Gateway is a layer 7 HTTP load balancing solution. Important to note, however, is that this feature is built upon IIS/ARR (Application Request Routing) in Windows Server.

As of now it is only available through the latest Azure PowerShell module, but moving forward it will become available in the portal. We can create it with `New-AzureApplicationGateway -Name AppGW -Subnets 10.0.0.0/24 -VnetName vNet01`, or we can use an ARM template from here –> https://azure.microsoft.com/en-us/services/application-gateway/

And we can now see that the AppGW is created.

NOTE: The default value for InstanceCount is 2, with a maximum value of 10. The default value for GatewaySize is Medium. You can choose between Small, Medium and Large.
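The sizing rules in the note above can be captured as a small validation sketch. `GatewaySettings` is a hypothetical helper, not an Azure type; the defaults (2 instances, Medium) and the maximum of 10 come from the note, while the minimum of 1 instance is an assumption.

```python
from dataclasses import dataclass

# Hypothetical validation of Application Gateway sizing options.
# From the post: InstanceCount defaults to 2 (max 10), GatewaySize defaults
# to Medium (Small, Medium or Large). Assumption: the minimum count is 1.

VALID_SIZES = ("Small", "Medium", "Large")


@dataclass(frozen=True)
class GatewaySettings:
    instance_count: int = 2
    gateway_size: str = "Medium"

    def __post_init__(self):
        # Reject values outside the documented range before any deployment.
        if not 1 <= self.instance_count <= 10:
            raise ValueError("InstanceCount must be between 1 and 10")
        if self.gateway_size not in VALID_SIZES:
            raise ValueError(f"GatewaySize must be one of {VALID_SIZES}")


if __name__ == "__main__":
    print(GatewaySettings())                                     # the defaults
    print(GatewaySettings(instance_count=4, gateway_size="Large"))
```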

Next we need to do the configuration. This is done with an XML file where we declare all the specifics, like frontend ports, which protocol to use, and whether, for instance, cookie-based persistence should be enabled.

The XML file should look like this:

```xml
<?xml version="1.0" encoding="utf-8"?>
<ApplicationGatewayConfiguration xmlns:i="http://www.w3.org/2001/XMLSchema-instance" xmlns="http://schemas.microsoft.com/windowsazure">
  <FrontendPorts>
    <FrontendPort>
      <Name>FrontendPort1</Name>
      <Port>80</Port>
    </FrontendPort>
  </FrontendPorts>
  <BackendAddressPools>
    <BackendAddressPool>
      <Name>BackendServers1</Name>
      <IPAddresses>
        <IPAddress>10.0.0.5</IPAddress>
        <IPAddress>10.0.0.6</IPAddress>
      </IPAddresses>
    </BackendAddressPool>
  </BackendAddressPools>
  <BackendHttpSettingsList>
    <BackendHttpSettings>
      <Name>BackendSetting1</Name>
      <Port>80</Port>
      <Protocol>Http</Protocol>
      <CookieBasedAffinity>Enabled</CookieBasedAffinity>
    </BackendHttpSettings>
  </BackendHttpSettingsList>
  <HttpListeners>
    <HttpListener>
      <Name>HTTPListener1</Name>
      <FrontendPort>FrontendPort1</FrontendPort>
      <Protocol>Http</Protocol>
    </HttpListener>
  </HttpListeners>
  <HttpLoadBalancingRules>
    <HttpLoadBalancingRule>
      <Name>HttpLBRule1</Name>
      <Type>basic</Type>
      <BackendHttpSettings>BackendSetting1</BackendHttpSettings>
      <Listener>HTTPListener1</Listener>
      <!-- Must match the <Name> of a BackendAddressPool defined above -->
      <BackendAddressPool>BackendServers1</BackendAddressPool>
    </HttpLoadBalancingRule>
  </HttpLoadBalancingRules>
</ApplicationGatewayConfiguration>
```
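Because the elements in this file reference each other by name, a typo (for example a rule pointing at a pool name that is not defined) is easy to miss. The following is a hypothetical pre-flight check in Python, not part of Azure PowerShell: `find_dangling_references` parses the file with the standard library's `xml.etree.ElementTree` and reports every listener or rule reference that has no matching `<Name>`. It assumes the element layout and namespace shown in the example above.

```python
import xml.etree.ElementTree as ET

# Hypothetical helper: checks that every name a listener or rule refers to
# is defined in the same Application Gateway config file.
NS = {"az": "http://schemas.microsoft.com/windowsazure"}


def find_dangling_references(xml_text: str) -> list[str]:
    root = ET.fromstring(xml_text.encode("utf-8"))

    def defined(path: str) -> set:
        # Collect the <Name> values of all elements found at `path`.
        return {el.text for el in root.findall(path + "/az:Name", NS)}

    ports = defined("az:FrontendPorts/az:FrontendPort")
    pools = defined("az:BackendAddressPools/az:BackendAddressPool")
    settings = defined("az:BackendHttpSettingsList/az:BackendHttpSettings")
    listeners = defined("az:HttpListeners/az:HttpListener")

    problems = []
    for listener in root.findall("az:HttpListeners/az:HttpListener", NS):
        name = listener.findtext("az:Name", namespaces=NS)
        port = listener.findtext("az:FrontendPort", namespaces=NS)
        if port not in ports:
            problems.append(f"{name}: FrontendPort '{port}' is not defined")

    for rule in root.findall("az:HttpLoadBalancingRules/az:HttpLoadBalancingRule", NS):
        name = rule.findtext("az:Name", namespaces=NS)
        for tag, known in (("BackendHttpSettings", settings),
                           ("Listener", listeners),
                           ("BackendAddressPool", pools)):
            ref = rule.findtext(f"az:{tag}", namespaces=NS)
            if ref not in known:
                problems.append(f"{name}: {tag} '{ref}' is not defined")
    return problems
```

For example, reading the file with `open(r"D:\config.xml", encoding="utf-8").read()` and passing the text in should return an empty list before the configuration is applied; a rule that refers to an undefined pool such as `BackendPool1` would be reported instead.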

To apply the configuration file to the gateway (and again whenever we change it), we can use the command `Set-AzureApplicationGatewayConfig -Name AppGW -ConfigFile "D:\config.xml"`

You can also create custom probes against each backend –> https://azure.microsoft.com/nb-no/documentation/articles/application-gateway-create-probe-classic-ps/

Traffic Manager

Traffic Manager is a DNS-based load balancing solution. Put simply, when a client requests a particular resource, Traffic Manager responds with the address of one of the backend endpoints. Even though it is an Azure feature, it can also be used to load balance on-premises services.

The logic behind it is pretty simple. We create a Traffic Manager resource in Azure, which is bound to an FQDN (resourcename.trafficmanager.net). When someone asks for this name, Traffic Manager looks at its available endpoints to determine which are healthy and which is the preferred endpoint for this client. The endpoints are just other FQDNs, which could be a resource in an Azure datacenter or an on-premises web service that is reachable externally.

Traffic Manager can also direct clients to their closest datacenter, using the performance routing method, which is the default.
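How Traffic Manager picks an answer can be sketched as a function over a list of endpoints. This is a simplified model, not the real service: the endpoint FQDNs and latency figures are hypothetical, and only two routing methods are modelled, `Performance` (lowest latency from the client's region) and `Failover` (first healthy endpoint in priority order). When every endpoint is degraded, the sketch treats all of them as candidates, which matches Traffic Manager's documented fallback as I understand it.

```python
from dataclasses import dataclass, field

# Simplified model of a Traffic Manager DNS answer. Endpoint names and
# latency figures are hypothetical; the real service uses its own
# latency tables and health probes.


@dataclass
class Endpoint:
    fqdn: str
    healthy: bool = True
    latency_ms: dict[str, float] = field(default_factory=dict)  # per client region


def answer(endpoints: list[Endpoint], client_region: str, method: str = "Performance") -> str:
    # Only healthy endpoints are candidates; if all are degraded, fall back to all.
    candidates = [e for e in endpoints if e.healthy] or endpoints
    if method == "Performance":
        return min(candidates, key=lambda e: e.latency_ms.get(client_region, float("inf"))).fqdn
    if method == "Failover":
        return candidates[0].fqdn  # list order is the priority order
    raise ValueError(f"unsupported routing method: {method}")


if __name__ == "__main__":
    eps = [Endpoint("iis-weu.cloudapp.net", True, {"europe": 20, "us": 110}),
           Endpoint("iis-eus.cloudapp.net", True, {"europe": 100, "us": 25}),
           Endpoint("web.onprem.example.com", True, {"europe": 40})]
    print(answer(eps, "europe"), answer(eps, "us"))
```

Note that the on-premises endpoint takes part in routing exactly like the Azure ones; Traffic Manager only needs a resolvable name and a reachable probe.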

So how do we use this externally? We don't want to hand out a trafficmanager.net URL to our external users. Instead, we configure the official FQDN as a CNAME alias pointing to the trafficmanager.net name. For instance, www.msandbu.org would be a CNAME alias for iis.trafficmanager.net, which in turn resolves to one of our endpoints, which might be another address entirely, depending on the routing method and availability.
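The resulting resolution chain can be illustrated with a toy resolver that follows CNAME records until it reaches an A record. All records here are hypothetical (the `iis-weu.cloudapp.net` endpoint is made up, and 203.0.113.0/24 is a documentation range); in reality the second hop is the answer Traffic Manager picks at query time.

```python
# Toy DNS resolver that follows CNAME records, illustrating the chain
# www.msandbu.org -> iis.trafficmanager.net -> endpoint -> IP address.
# All records are hypothetical; 203.0.113.0/24 is a documentation range.

RECORDS = {
    "www.msandbu.org": ("CNAME", "iis.trafficmanager.net"),
    "iis.trafficmanager.net": ("CNAME", "iis-weu.cloudapp.net"),  # chosen by Traffic Manager
    "iis-weu.cloudapp.net": ("A", "203.0.113.10"),
}


def resolve(name: str, records: dict = RECORDS, max_hops: int = 8) -> list[str]:
    """Return every name in the CNAME chain, ending with the final IP address."""
    chain = [name]
    for _ in range(max_hops):
        rtype, value = records[name]
        chain.append(value)
        if rtype == "A":
            return chain
        name = value  # follow the alias
    raise RuntimeError("too many CNAME hops")


if __name__ == "__main__":
    print(" -> ".join(resolve("www.msandbu.org")))
```

Because users only ever see www.msandbu.org, the Traffic Manager profile and its endpoints can change without touching the public name.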

So now that we have gone through all the different load balancing features in Azure, can we combine them to achieve even more uptime and better performance? Yes!

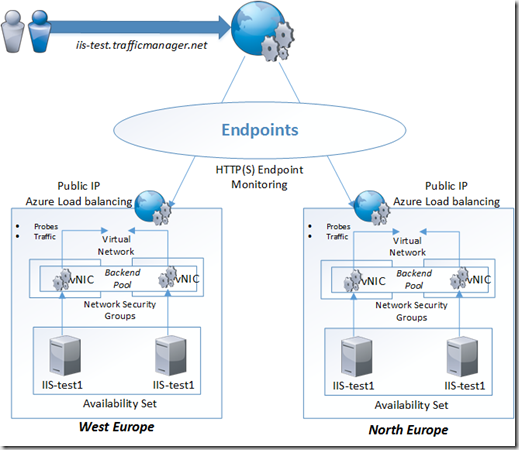

A good example is using Traffic Manager in combination with Azure Load Balancing. Think about an e-commerce solution where we have multiple instances deployed across different Azure regions.

Here we can, for instance, have Traffic Manager load balance between the regions and point the end user to the closest location, and from there Azure Load Balancing distributes traffic between the resources inside each region. This combines DNS-based and TCP-based load balancing.

Now, since we have all these nifty features in Azure, why would we need third-party load balancing features at all?

- Sure Connect (the load balancer queues incoming requests that the backend resources are unable to process)

- More granular load balancing (for instance custom probes and monitors, SIP traffic, database load balancing, group-based load balancing across multiple ports, and different load balancing methods)

- Content switching (allows us to route requests based on, for instance, which URL the end user is going to)

- Web Application Firewall capabilities (Azure only has Network Security Group ACLs and no deep insight into what kind of traffic is hitting the external services; most ADCs have their own WAF feature that can be used to mitigate attacks)

- Rewrite and Responder policies (altering HTTP requests inline before they hit the services, for instance when moving from one site to another without changing the external FQDN, or changing HTTP headers. We can also use Responder policies to respond directly to requests for blocked endpoints)

- HTTP caching and compression (most ADCs can cache frequently accessed data and compress outbound data to optimize traffic flow)

- Web optimization (in most cases these solutions can also further optimize the outbound web traffic)

- GSLB (most ADCs also have their own Global Server Load Balancing feature, which does the same job as Traffic Manager)
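Content switching, one of the features listed above, boils down to choosing a backend pool from the request URL. Here is a minimal sketch using longest-prefix matching on the path; the paths and pool names are hypothetical, and real ADCs such as NetScaler express this through their own content switching policies, which can match far more than the path.

```python
# Minimal content switching sketch: choose a backend pool from the URL path
# using longest-prefix matching. Paths and pool names are hypothetical.

POLICIES = {
    "/": "web-pool",
    "/shop/": "shop-pool",
    "/shop/api/": "api-pool",
    "/images/": "static-pool",
}


def select_pool(path: str, policies: dict[str, str] = POLICIES) -> str:
    """Return the pool of the most specific policy whose prefix matches `path`."""
    matches = [prefix for prefix in policies if path.startswith(prefix)]
    if not matches:
        raise LookupError(f"no policy matches {path}")
    return policies[max(matches, key=len)]


if __name__ == "__main__":
    for path in ("/shop/api/cart", "/shop/index.html", "/images/logo.png", "/about"):
        print(path, "->", select_pool(path))
```

Picking the longest match lets a specific rule such as `/shop/api/` override the broader `/shop/` rule without depending on the order in which policies are evaluated.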

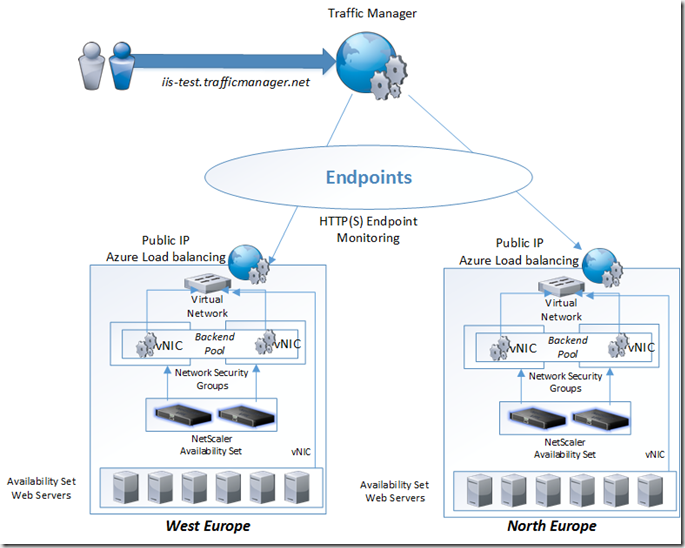

Most of these solutions are available from the Azure Marketplace, and in most cases they require their own license as well. They all run as virtual machine instances and have the same limitations as any other VM. So how would they fit in?

In most cases it will be a combination of many elements. Since the ADC runs as a virtual appliance, it has the same limitations as other VMs: the appliances need to be placed in their own availability set, and in most cases we cannot use their built-in HA feature because of Azure networking limitations around failover. Therefore we use Azure Load Balancing to distribute traffic between them, with a probe to check whether each appliance is online. In this scenario all web servers and NetScalers are in the same virtual network, but only the NetScalers are in the Azure Load Balancer's backend pool, since the NetScalers handle the internal communication with the web servers.

Now we have a three-tier load balancing setup, so an example traffic flow would look like this:

1: User requests test.msandbu.org

2: Traffic Manager responds with a public IP from the Azure region closest to the end user

3: User initiates a connection to the public IP in that Azure region

4: The Azure Load Balancer serving the VIP looks at the connection and forwards it to one of the active NetScalers

5: The NetScaler inspects the request and evaluates any policies for load balancing, content switching, URL rewrites or WAF

6: The response is served from one of the web servers back to the NetScaler and on to the client.
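The six steps can be condensed into one end-to-end simulation, where each tier is reduced to its core decision: region by latency (DNS), NetScaler by 5-tuple hash over appliances passing their probe (TCP), and web server by URL path (HTTP). Everything here is hypothetical: the regions, VIPs, latencies and server names are made up, port 443 is assumed, and SHA-256 stands in for the hash functions the real products use.

```python
import hashlib

# End-to-end sketch of the six steps. All regions, addresses, latencies and
# server names are hypothetical: DNS (Traffic Manager) -> TCP (Azure Load
# Balancer) -> HTTP (NetScaler content switching).
REGIONS = {
    "westeurope": {"vip": "203.0.113.10",
                   "netscalers": {"ns1": True, "ns2": True},   # probe status
                   "pools": {"/": ["web1", "web2"], "/shop/": ["shop1"]}},
    "eastus": {"vip": "198.51.100.10",
               "netscalers": {"ns3": True, "ns4": True},
               "pools": {"/": ["web3", "web4"], "/shop/": ["shop2"]}},
}
LATENCY_MS = {("europe", "westeurope"): 20, ("europe", "eastus"): 100,
              ("us", "westeurope"): 110, ("us", "eastus"): 25}


def stable_index(key: str, n: int) -> int:
    """Deterministic hash of `key` into range(n); SHA-256 is a stand-in."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big") % n


def handle_request(client_region: str, src_ip: str, src_port: int, path: str):
    # Steps 1-2: Traffic Manager answers with the VIP of the closest region.
    region = min(REGIONS, key=lambda r: LATENCY_MS[(client_region, r)])
    site = REGIONS[region]
    # Steps 3-4: Azure Load Balancer hashes the 5-tuple over NetScalers passing the probe.
    online = sorted(ns for ns, up in site["netscalers"].items() if up)
    if not online:
        raise RuntimeError(f"no NetScaler in {region} is passing its probe")
    flow = f"{src_ip}|{src_port}|{site['vip']}|443|Tcp"  # assumed HTTPS on port 443
    netscaler = online[stable_index(flow, len(online))]
    # Step 5: content switching on the longest matching URL path prefix.
    prefix = max((p for p in site["pools"] if path.startswith(p)), key=len)
    pool = site["pools"][prefix]
    # Step 6: a web server from that pool serves the response (a hash stands in
    # for whatever load balancing method the NetScaler is configured with).
    server = pool[stable_index(f"{src_ip}|{path}", len(pool))]
    return region, site["vip"], netscaler, server


if __name__ == "__main__":
    print(handle_request("europe", "192.0.2.7", 50123, "/shop/cart"))
    print(handle_request("us", "192.0.2.8", 40000, "/about"))
```

If one NetScaler fails its probe, the Azure Load Balancer simply stops hashing connections to it, which is how the pair stays available without the appliances' own HA feature.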

So now we have a combination of DNS, TCP and HTTP/SSL load balancing to make sure that the content is optimized when delivered.