So, another blog post in this storage series on Hyper-V. In the previous posts I discussed a bit about which storage features Hyper-V has and the issues with them. Well, time to take that to the next level and show how Nutanix solves the performance issues and how Microsoft does it with its Windows Server features.

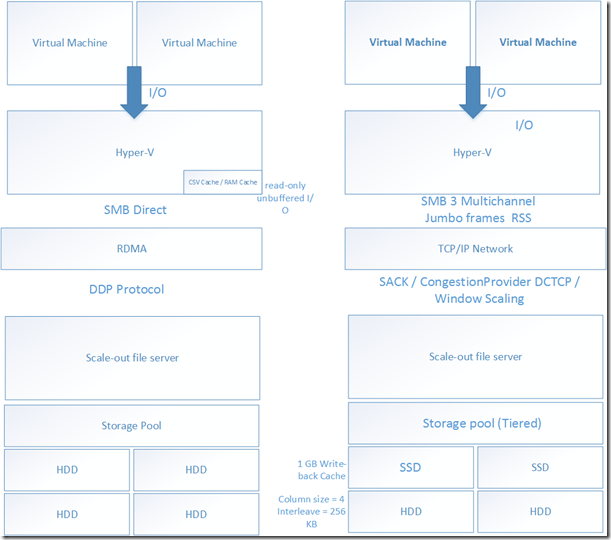

First off, we have the native capabilities in Windows Server with Storage Spaces. We can benefit from SMB 3 and, for instance, SMB Multichannel with RSS, plus jumbo frames, which allow for much less per-packet overhead on a TCP network. Of course, getting the full throughput also requires some knowledge of which congestion algorithms to use.
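To put a number on the jumbo frame benefit, here is a rough back-of-the-envelope sketch. It assumes plain IPv4 and TCP headers without options (20 bytes each) and 38 bytes of Ethernet overhead per frame (header, FCS, preamble and inter-frame gap). The function names are made up for this example, and real SMB traffic will look a bit different.

```python
import math

# Assumed per-frame costs in bytes (no VLAN tag, no IP/TCP options).
ETHERNET_OVERHEAD = 14 + 4 + 8 + 12   # header + FCS + preamble + inter-frame gap
IP_TCP_HEADERS = 20 + 20              # IPv4 header + TCP header

def payload_per_frame(mtu):
    """TCP payload bytes that fit in one frame for a given MTU."""
    return mtu - IP_TCP_HEADERS

def wire_efficiency(mtu):
    """Share of the bytes on the wire that is actual payload."""
    return payload_per_frame(mtu) / (mtu + ETHERNET_OVERHEAD)

def frames_needed(total_bytes, mtu):
    """Number of frames needed to move total_bytes of payload."""
    return math.ceil(total_bytes / payload_per_frame(mtu))

if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(mtu, frames_needed(1 << 20, mtu), f"{wire_efficiency(mtu):.2%}")
```

With these assumptions, moving 1 MiB takes roughly six times fewer frames at an MTU of 9000 than at 1500, which is where most of the saving comes from: fewer headers on the wire and fewer packets to process per byte moved.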

We can also use tiering in the back end together with the write-back cache feature (which is 1 GB by default). During the night, the tiering feature runs an optimization task that moves the hot data to the SSD tier and the cold data to the HDD tier.
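The idea behind that nightly optimization can be sketched in a few lines. This is only a toy model, not how Storage Spaces implements it: the `plan_tiers` function, the block names and the access counters are all hypothetical, and the only thing it shows is the principle of ranking data by heat and filling the SSD tier first.

```python
def plan_tiers(access_counts, ssd_slots):
    """Split blocks into a hot (SSD) set and a cold (HDD) set.

    access_counts: dict mapping a block id to how often it was accessed.
    ssd_slots: how many blocks fit on the SSD tier (an assumed unit).
    """
    # Hottest blocks first; ties are broken by block id to keep it deterministic.
    ranked = sorted(access_counts, key=lambda block: (-access_counts[block], block))
    hot = set(ranked[:ssd_slots])
    cold = set(ranked[ssd_slots:])
    return hot, cold

if __name__ == "__main__":
    counts = {"a": 5, "b": 1, "c": 9, "d": 0}
    hot, cold = plan_tiers(counts, ssd_slots=2)
    print("SSD tier:", sorted(hot))
    print("HDD tier:", sorted(cold))
```

Because the pass only runs on a schedule, data that becomes hot during the day stays on the HDD tier until the next run, which is why the write-back cache matters for absorbing bursts in the meantime.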

On the other hand, we can have an RDMA deployment, which in essence bypasses the host TCP/IP stack completely and provides zero-copy networking. We can use this in conjunction with CSV cache, which only benefits read-only unbuffered I/O by caching it in RAM on the host. This feature is enabled at the CSV disk level, is integrated into Failover Cluster Manager, and is leveraged on all the hosts in a cluster. But this feature is disabled for a CSV on a tiered storage space, so the two cannot both be active on the same deployment.

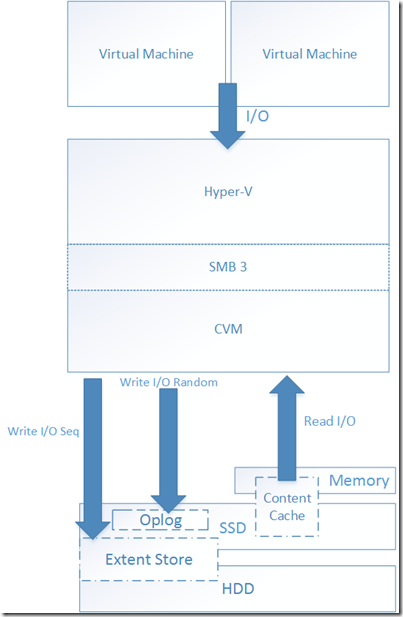

In the Nutanix I/O path things are a bit different, since the CVM (Controller VM) serves storage locally from the node to the Hyper-V host over SMB, using disk passthrough for the local disks.

The I/O fabric in a Nutanix node consists of several logical stores. First off, we have the Content Cache, a deduplicated read cache that consists of both memory and SSD; the in-memory part is served from the memory of the CVM. Here we can benefit from inline deduplication.
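To illustrate what a deduplicated read cache buys us, here is a minimal sketch of a content-addressed cache. It is not the Nutanix implementation: the `DedupReadCache` class, the use of SHA-256 as fingerprint and the simple LRU eviction are all assumptions made for the example.

```python
import hashlib
from collections import OrderedDict

class DedupReadCache:
    """Toy read cache that stores identical blocks only once."""

    def __init__(self, capacity):
        self.capacity = capacity      # max number of unique blocks kept
        self.index = {}               # logical address -> fingerprint
        self.data = OrderedDict()     # fingerprint -> block, in LRU order

    def put(self, address, block):
        fingerprint = hashlib.sha256(block).hexdigest()
        self.index[address] = fingerprint
        self.data[fingerprint] = block
        self.data.move_to_end(fingerprint)
        # Evict the least recently used unique blocks when over capacity.
        while len(self.data) > self.capacity:
            self.data.popitem(last=False)

    def get(self, address):
        fingerprint = self.index.get(address)
        if fingerprint is None or fingerprint not in self.data:
            return None               # cache miss
        self.data.move_to_end(fingerprint)
        return self.data[fingerprint]

if __name__ == "__main__":
    cache = DedupReadCache(capacity=2)
    cache.put("vm1:0", b"x" * 4096)
    cache.put("vm2:0", b"x" * 4096)   # same content, different VM
    print(len(cache.index), "addresses,", len(cache.data), "cached block")
```

Two VMs cloned from the same image read mostly identical blocks, so a cache keyed on content rather than on address can hold far more useful data in the same amount of memory.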

Then we have the OpLog, which is built to handle random I/O. When dealing with bursts of random I/O, it coalesces them and then drains them sequentially to the other store, the Extent Store. The OpLog lives on the SSD tier. In the case of sequential write I/O, the OpLog is bypassed and the data is written directly to the Extent Store. The OpLog is also replicated to one or more other nodes in the cluster to handle high availability.
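The write path described above can be sketched roughly like this. Keep in mind that it is a heavily simplified model: the `WritePath` class is hypothetical, a write is treated as sequential simply when it continues right where the previous one ended (an assumption, not how the real system decides), and replication of the OpLog to other nodes is left out.

```python
class WritePath:
    """Toy model: random writes go to the oplog, sequential ones bypass it."""

    def __init__(self):
        self.oplog = []            # buffered (offset, data) pairs, arrival order
        self.extent_store = {}     # offset -> data, the persistent store
        self._next_offset = None   # where a sequential write would continue

    def write(self, offset, data):
        sequential = offset == self._next_offset
        self._next_offset = offset + len(data)
        if sequential:
            self.extent_store[offset] = data
            return "extent_store"
        self.oplog.append((offset, data))
        return "oplog"

    def drain(self):
        """Flush buffered writes to the extent store in offset order."""
        # sorted() is stable, so a later write to the same offset wins.
        for offset, data in sorted(self.oplog, key=lambda entry: entry[0]):
            self.extent_store[offset] = data
        drained = len(self.oplog)
        self.oplog.clear()
        return drained

if __name__ == "__main__":
    path = WritePath()
    print(path.write(4096, b"b" * 4096))
    print(path.write(8192, b"c" * 4096))
    print(path.write(0, b"a" * 4096))
    print("drained", path.drain(), "buffered writes")
```

The point of the buffer is that the slow tier only ever sees ordered writes: the random burst is absorbed on SSD and sorted before it is drained.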

The Extent Store serves as the persistent data storage in a Nutanix node and consists of SSD and HDD. Data enters the Extent Store either directly, as sequential write I/O, or by being drained from the OpLog. The Extent Store can also benefit from deduplication. This is a cluster-wide deduplication feature, meaning that all nodes participate.

So, as we can see, Nutanix leverages tiering, deduplication and in-memory caching while maintaining availability for data across the nodes in a cluster, and combines this with data locality to keep latency as low as possible.