Many people are thinking about moving to the cloud, or are at least looking at the possibilities. Some might wonder: can my workload function in the cloud? Or perhaps you are thinking of deploying Citrix in the cloud?

For many, the failure of a Citrix implementation is tied to the SAN, or perhaps to the IOPS the SAN can deliver.

So I took Azure for a test-drive to see how well the storage there handles IO.

For those who are unfamiliar with IaaS on Azure, the VMs have 2 disks by default, and you can add data disks on top of those.

1: The OS disk, which is like the familiar system disk. (This is labeled C:)

2: The temporary disk. (The swap file is placed here; this disk is not persistent.)

3: Data disks. (These are not included when you create a VM, so you have to add them later.) Depending on which VM instance you create, there is a limit on how many data disks you can add.

So far I have tested a small VM in Azure, and I wanted to determine whether this VM is slower than an on-premises VM.

In theory the VM should be limited to a maximum of 500 IOPS per data disk:

http://msdn.microsoft.com/en-us/library/windowsazure/dn197896.aspx
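
To put the 500 IOPS figure in perspective, here is a minimal sketch of the arithmetic: an IOPS cap multiplied by the block size gives an upper bound on throughput. The function name and the block sizes chosen are my own for illustration, and the result is only a theoretical ceiling; other limits such as the instance's network bandwidth may kick in first.

```python
def throughput_ceiling_mb_s(iops_limit: int, block_size_bytes: int) -> float:
    """Upper bound on throughput in MB/s for a disk capped at iops_limit."""
    return iops_limit * block_size_bytes / (1024 * 1024)


IOPS_LIMIT = 500  # per-data-disk figure quoted in the text

# Block sizes are illustrative choices, not values taken from the tests.
for block_size in (512, 4096, 64 * 1024, 1024 * 1024):
    ceiling = throughput_ceiling_mb_s(IOPS_LIMIT, block_size)
    print(f"{block_size:>8} B blocks -> at most {ceiling:8.2f} MB/s")
```

With 512-byte blocks the ceiling is only about 0.24 MB/s, which is why small-block tests say far more about IOPS than about bandwidth.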

And remember, if you run into limited capacity, check which VM instance you are running. Each instance size has a limit on network bandwidth.

All of the disks in Azure are set to a 512-byte sector size by default. For comparison, I ran the same IOmeter tests on my personal laptop, which has a top-notch Samsung SSD.

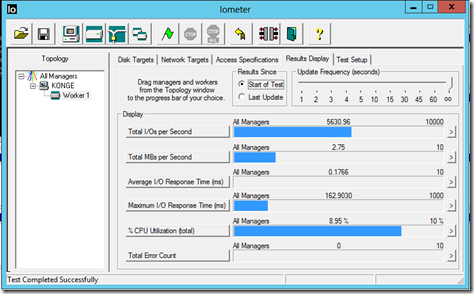

First I ran a 100% read IO test using a 512-byte sector size in IOmeter.

The system disk on the Azure VM actually performed quite well, which might have something to do with the cache on the system disk.

But again, it is nothing compared to the SSD in my computer.

I also attached an empty data disk without cache and ran the same 512-byte 100% read IO test.

Ouch, this is similar to what a 10k SAS disk delivers in terms of performance. Let's try another data disk with write and read cache enabled and run the same read test.

Clearly the cache has its perks. Write/read cache is also enabled for the operating system disk.

Using the read cache will improve sequential IO read performance when the reads ‘hit’ the cache. Sequential reads will hit the cache if:

- The data has been read before.

- The cache is large enough to hold all of the data.
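
The two conditions above can be illustrated with a small simulation. This is a minimal sketch that assumes an LRU (least recently used) eviction policy; I do not know which policy the Azure cache actually uses, and the function name and block counts are made up for the example.

```python
from collections import OrderedDict


def sequential_hit_rate(file_blocks: int, cache_blocks: int, passes: int = 2) -> float:
    """Cache hit rate of the last sequential pass over a file.

    Assumes an LRU cache holding at most cache_blocks blocks (an assumption,
    not a documented property of the Azure disk cache).
    """
    cache = OrderedDict()
    hits = 0
    for _ in range(passes):
        hits = 0  # only the final pass is reported
        for block in range(file_blocks):
            if block in cache:
                hits += 1
                cache.move_to_end(block)  # mark as most recently used
            else:
                cache[block] = True
                if len(cache) > cache_blocks:
                    cache.popitem(last=False)  # evict least recently used
    return hits / file_blocks


print(sequential_hit_rate(file_blocks=100, cache_blocks=100, passes=1))
print(sequential_hit_rate(file_blocks=100, cache_blocks=100, passes=2))
print(sequential_hit_rate(file_blocks=100, cache_blocks=50, passes=2))
```

Under this assumption, the first pass never hits, a second pass hits every time when the cache holds the whole file, and a cache that is too small gets no hits at all on a sequential re-read because each block is evicted before it is needed again.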

Now it looks like I did not push the limit enough. The cache seems to be about 2 GB, since when running HD Tune I saw performance decline after 2 GB.

This is the regular data disk without cache

This is the data disk with cache (the horizontal axis is in GB)

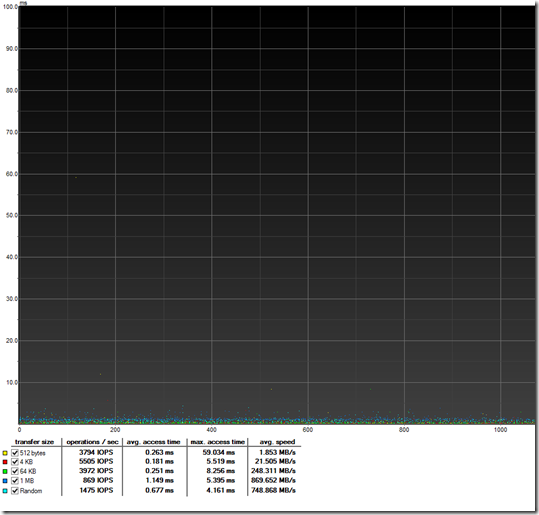
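
The 2 GB estimate came from eyeballing where the graph drops. If you export the read rates as numbers, the same idea can be sketched in code. This is only an illustration: the sample values below are synthetic, not my measurements, and the function name and the 50% threshold are arbitrary choices.

```python
def find_cache_knee(samples, drop_ratio=0.5, baseline_points=3):
    """Return the first position (GB) where throughput falls below
    drop_ratio times the average of the first baseline_points samples.

    samples is a list of (position_gb, mb_per_s) tuples in read order.
    Returns None if throughput never drops that far.
    """
    baseline = sum(rate for _, rate in samples[:baseline_points]) / baseline_points
    for position_gb, rate in samples:
        if rate < drop_ratio * baseline:
            return position_gb
    return None


# Synthetic numbers for illustration only (NOT results from the tests).
samples = [(0.5, 200), (1.0, 198), (1.5, 201), (2.0, 195), (2.5, 60), (3.0, 58)]
print(find_cache_knee(samples))
```

With this made-up series the drop shows up at the first sample past 2 GB, which is the kind of pattern that points to a cache of roughly that size.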

I also did some IOPS testing here. This is the data drive without cache:

Random IO here is equal to what we got in IOmeter. Let's try the data disk with cache.

Now this is my laptop SSD (picture below), and as you can see, the cached data drive performs better, since it is using RAM as cache.

Now let's run a file benchmark with 500 MB files.

The first one is my SSD drive

This is the data drive with cache.

The regular data drive.

Now for a twist: I removed the data disk without cache and added another data disk with full cache. I took the two cached data disks, converted them to a RAID 0 volume, and ran the same tests again.

Some different results. At 1 MB transfer sizes with random IO I get a huge bandwidth increase, but at the smaller block sizes I see no performance increase.

And in the regular file transfer benchmark I actually see a decline in performance.

Stay tuned for more! Next I am going to set up a XenDesktop architecture to see how it performs in real life.
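
One possible explanation for the block-size pattern is how RAID 0 lays out data: a request smaller than the stripe size lands on a single disk, while a large request spans both. The sketch below shows that mapping. The 64 KB stripe size is an assumption (I did not note the one used), the function name is my own, and I have not verified that this is what actually caused the results.

```python
def disks_touched(offset: int, length: int, stripe_size: int, disk_count: int) -> set:
    """Return the indexes of the disks that one request touches in RAID 0."""
    first_stripe = offset // stripe_size
    last_stripe = (offset + length - 1) // stripe_size
    return {stripe % disk_count for stripe in range(first_stripe, last_stripe + 1)}


STRIPE_SIZE = 64 * 1024  # assumed stripe size, not taken from the test setup
DISKS = 2                # the two cached data disks

for size in (512, 4096, 64 * 1024, 1024 * 1024):
    touched = disks_touched(offset=0, length=size,
                            stripe_size=STRIPE_SIZE, disk_count=DISKS)
    print(f"{size:>8} B request touches {len(touched)} disk(s)")
```

Under this assumption only the 1 MB request is served by both disks at once, so a single small request gains nothing from the second disk unless many requests are in flight at the same time.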

Very nice read thnx!

Thanks Barry! 🙂

Thank you for your blog! Are you going to examine XenDesktop (or XenApp) on Azure in the near future? I am myself working on a POC for XenApp on Azure. As far as I can see, the real-world performance of Azure is not too impressive compared to the setup we have on premises.