Anyone who has worked with Azure for some time has run into challenges delivering enough performance for a certain application or workload.

For those who are not aware, Microsoft limits the maximum IOPS of disks attached to a virtual machine in Azure. Note that these are upper limits, not a guarantee that you get 500 IOPS on each data disk.

Per data disk limits by virtual machine instance tier (8 KB I/O size):

Basic: 300 IOPS

Standard: 500 IOPS / 60 MB/s

There is also a cap of 20,000 IOPS per storage account.
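To put these caps in perspective, here is a small arithmetic sketch of the theoretical ceiling for a striped set of Standard-tier data disks. The figures (500 IOPS and 60 MB/s per disk, 20,000 IOPS per storage account, 8 KB I/O size) are the limits quoted above; the function name and the 14-disk example are only illustrative, and it assumes all disks sit in one storage account. Real results will be lower, since these are maximums and not guarantees.

```python
# Per-disk and per-account caps quoted above (Standard tier).
DISK_IOPS = 500
DISK_MBPS = 60
ACCOUNT_IOPS = 20_000
IO_SIZE_KB = 8


def theoretical_ceiling(disk_count):
    """Best-case aggregate limits for `disk_count` striped data disks
    that all live in one storage account (illustrative helper)."""
    iops = min(disk_count * DISK_IOPS, ACCOUNT_IOPS)
    return {
        "iops": iops,
        # Throughput if every I/O is 8 KB (1 MB = 1024 KB).
        "mbps_at_8kb": iops * IO_SIZE_KB / 1024,
        # Upper bound if I/Os are large enough to hit the per-disk MB/s limit.
        "mbps_cap": disk_count * DISK_MBPS,
    }


ceiling = theoretical_ceiling(14)
print(ceiling)

# Number of Standard-tier disks at which the storage account cap is reached.
disks_to_saturate_account = ACCOUNT_IOPS // DISK_IOPS
print(disks_to_saturate_account)
```

On paper, 14 disks give far more headroom than a single disk, which is what makes the measured results further down interesting.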

To get “past” the limit, Microsoft has from time to time suggested using Storage Spaces (essentially a software RAID solution introduced with Windows Server 2012) to spread the I/O load across several data disks. This is a supported solution.

From the MSDN blog post at http://blogs.msdn.com/b/dfurman/archive/2014/04/27/using-storage-spaces-on-an-azure-vm-cluster-for-sql-server-storage.aspx: “physical disks use Azure Blob storage, which has certain performance limitations. However, creating a storage space on top of a striped set of such physical disks lets you work around these limitations to some extent.”

I therefore decided to run a test using an A4 virtual machine with 14 data disks attached, creating a storage pool with a striped volume to see how it performed. Note that this was a regular Storage Spaces setup, which by default uses a chunk size of 256 KB and a column count of 8 disks: http://social.technet.microsoft.com/wiki/contents/articles/11382.storage-spaces-frequently-asked-questions-faq.aspx#What_are_columns_and_how_does_Storage_Spaces_decide_how_many_to_use

I put all disks in a single pool and created a simple striped volume to spread the I/O workload across the disks (not recommended if you need redundancy!). Note that these tests were run in the West Europe datacenter. When I created the virtual disk, I had to set the number of columns explicitly so that the stripe would span all the disks.

Get-StoragePool -FriendlyName test | New-VirtualDisk -FriendlyName "test" -ResiliencySettingName Simple -UseMaximumSize -NumberOfColumns 14
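To make the column setting more concrete, here is a rough sketch of how a simple (striped) layout spreads data, assuming plain round-robin striping with a 256 KB chunk size and 14 columns. The function names are hypothetical, and this models the general striping technique rather than the internals of Storage Spaces.

```python
INTERLEAVE = 256 * 1024   # bytes written to one column before moving on
COLUMNS = 14              # one column per data disk in this setup


def locate(offset, interleave=INTERLEAVE, columns=COLUMNS):
    """Map a byte offset on the virtual disk to (column, offset_on_column)
    under simple round-robin striping."""
    chunk = offset // interleave          # index of the 256 KB chunk
    column = chunk % columns              # disk that holds this chunk
    stripe = chunk // columns             # number of full stripes before it
    return column, stripe * interleave + offset % interleave


def columns_touched(offset, length, interleave=INTERLEAVE, columns=COLUMNS):
    """Set of columns that a single I/O of `length` bytes touches."""
    first = offset // interleave
    last = (offset + length - 1) // interleave
    return {chunk % columns for chunk in range(first, last + 1)}


# A small 8 KB read that starts on a chunk boundary stays on one disk ...
small = columns_touched(offset=10 * INTERLEAVE, length=8 * 1024)
print(small)
# ... while a 4 MB sequential transfer is spread over every column.
large = columns_touched(offset=0, length=4 * 1024 * 1024)
print(len(large))
```

Under this model a single small request is still served by one disk at that disk's latency, so striping mainly benefits large or highly parallel transfers.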

Also, I did not enable any read/write cache on the data disks. For the measurements I used HD Tune Pro, since it delivers a nice GUI chart as well as IOPS figures.

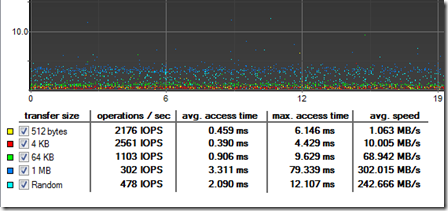

For comparison, this is my local machine with an SSD drive (READ), using the Random Access test.

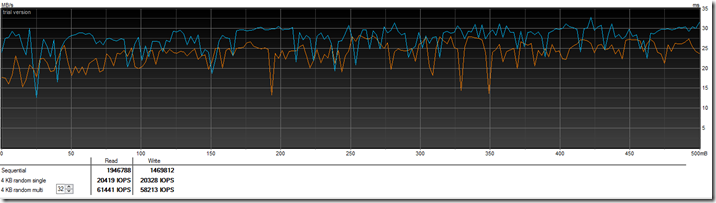

This is from the storage space (simple virtual disk across 14 columns with 256 KB chunks) (READ)

This is from the D: drive in Azure (note that this is not a D-instance with SSD)

This is from the C: drive in Azure (which by default has caching enabled for read/write)

Next is a regular benchmark test that writes a 500 MB file to the volume on the virtual disk.

Then the same test against the D: drive.

Then for C:, which has read/write caching enabled, I get some spikes because of the cache.

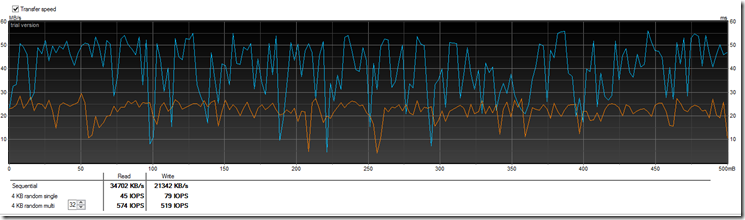

This is for a regular data disk that is not in a storage pool (I simply deleted the pool and used one of its disks).

This is the regular benchmark test.

Random Access test

In conclusion, a Storage Spaces setup in Azure is only a few percent faster than a single data disk. The problem with using Storage Spaces in Azure is the access time (latency) of the underlying disks, which becomes the bottleneck when you set up a storage pool.
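One way to see why latency dominates is the general relationship between outstanding requests, latency, and achievable IOPS (Little's law). The sketch below uses made-up latency values purely for illustration; they are not measurements from these tests, and the function name is hypothetical.

```python
def max_iops(outstanding_ios, latency_ms):
    """Upper bound on IOPS when `outstanding_ios` requests are kept in
    flight and each takes `latency_ms` to complete (Little's law)."""
    return outstanding_ios * 1000.0 / latency_ms


# Illustrative latencies only, not measured values.
for label, latency_ms in [("low-latency local disk", 0.1),
                          ("higher-latency remote disk", 10.0)]:
    one = max_iops(1, latency_ms)
    many = max_iops(14, latency_ms)
    print(f"{label}: {one:.0f} IOPS with 1 request in flight, "
          f"{many:.0f} with 14")
```

If a benchmark issues one request at a time, adding more disks to the stripe does not raise this bound, because each request still waits for one disk's full round trip. The extra columns only contribute when more I/O is kept in flight.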

Luckily, Microsoft is coming with a Premium Storage account type that offers up to 5,000 IOPS per data disk, which is closer to regular SSD performance and should make Azure a more viable platform for delivering applications that are more I/O intensive.