On the 20th of October this year, Microsoft and Dell announced their new platform, Cloud Platform System (CPS), oddly enough just two months after VMware announced EVO:RAIL and EVO:RACK.

This platform is aimed at customers that want a fully functional private cloud offering, or even hosting providers that want a fully tested, validated platform.

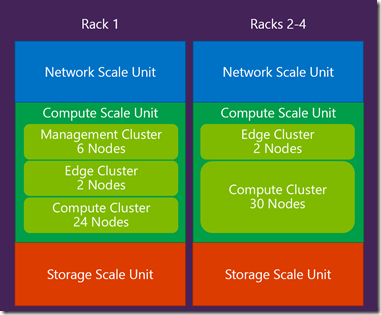

CPS is an offering that starts at 1 rack and can be scaled up to 4 racks. The platform consists solely of Dell hardware and the Microsoft software stack: Hyper-V, System Center and Azure Pack.

So within a rack we have the following hardware offering:

- 512 cores across 32 servers (each with a dual socket Intel Ivy Bridge, E5-2650v2 CPU)

- 8 TB of RAM with 256 GB per server

- 282 TB of usable storage

- 1360 Gb/s of internal rack connectivity

- 560 Gb/s of inter-rack connectivity

- Up to 60 Gb/s connectivity to the external world
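The core and memory totals can be cross-checked from the per-server figures. This is only a quick sanity check; the 8 cores per socket is an assumption based on the E5-2650 v2 being an 8-core part, it is not stated in the list above.

```python
# Sanity-check the per-rack compute figures quoted in the list above.
SERVERS_PER_RACK = 32
SOCKETS_PER_SERVER = 2
CORES_PER_SOCKET = 8        # assumption: Intel E5-2650 v2 has 8 cores
RAM_GB_PER_SERVER = 256

total_cores = SERVERS_PER_RACK * SOCKETS_PER_SERVER * CORES_PER_SOCKET
total_ram_tb = SERVERS_PER_RACK * RAM_GB_PER_SERVER / 1024

print(f"Cores per rack: {total_cores}")
print(f"RAM per rack:   {total_ram_tb:.0f} TB")
```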

So what kind of real hardware is behind this rack?

Networking

5 x Force10 – S4810P

1 x Force10 – S55

Compute Scale Unit (32 x Hyper-V hosts)

Dell PowerEdge C6220 II – 4 nodes per 2U

Dual socket Intel Ivy Bridge (E5-2650v2 @ 2.6GHz)

256 GB memory

2 x 10 GbE Mellanox NICs (LBFO team, NVGRE offload) (tenant)

2 x 10 GbE Chelsio (iWARP/RDMA) (SMB 3.0 based shared storage)

1 x local 200 GB SSD (boot/paging)

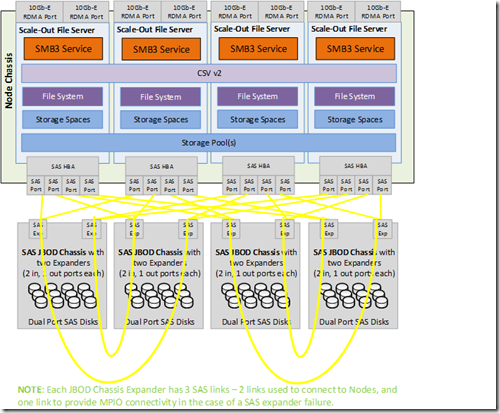

Storage Scale Unit (4 x File servers, 4 x JBODS)

Dell PowerEdge R620v2 servers (4 servers for Scale-Out File Server)

Dual socket Intel Ivy Bridge (E5-2650v2 @ 2.6GHz)

2 x LSI 9207-8E SAS Controllers (shared storage)

2 x 10 GbE Chelsio T520 (iWARP/RDMA)

PowerVault MD3060e JBODs (48 HDD, 12 SSD)

4 TB HDDs and 800 GB SSDs

A single rack can support up to 2000 VMs (2 vCPU, 1.75 GB RAM, and 50 GB disk each). And like VMware's EVO:RAIL offering, this platform comes fully integrated: all you have to do is connect network and power and you are good to go.

On the storage side, CPS is set up using Storage Spaces with tiering and 3-way mirroring for tenant workloads, and dual parity for backup. Note also that the backup pool is configured with 126 TB and is set up to use deduplication. Tenant workloads are set up to use 156 TB.
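To get a feel for what 2000 VMs of that size mean for one rack, here is a rough back-of-the-envelope sketch. It assumes all 32 hosts (512 cores, 32 x 256 GB RAM) are available to tenant VMs and ignores any hosts reserved for management, so treat the ratios as an illustration rather than official sizing.

```python
# Rough resource footprint of the quoted VM density for a single rack.
VMS_PER_RACK = 2000
VCPU_PER_VM = 2
RAM_GB_PER_VM = 1.75
DISK_GB_PER_VM = 50

PHYSICAL_CORES = 512          # from the rack spec
RACK_RAM_GB = 32 * 256        # 32 hosts with 256 GB each

total_vcpus = VMS_PER_RACK * VCPU_PER_VM
vcpu_per_core = total_vcpus / PHYSICAL_CORES          # CPU overcommit ratio
ram_needed_gb = VMS_PER_RACK * RAM_GB_PER_VM
ram_share = ram_needed_gb / RACK_RAM_GB               # fraction of rack RAM
disk_needed_tb = VMS_PER_RACK * DISK_GB_PER_VM / 1000 # decimal TB

print(f"vCPUs: {total_vcpus} ({vcpu_per_core:.2f} per physical core)")
print(f"RAM:   {ram_needed_gb:.0f} GB ({ram_share:.0%} of rack RAM)")
print(f"Disk:  {disk_needed_tb:.0f} TB")
```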

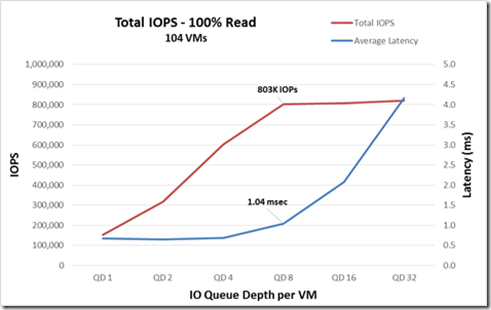

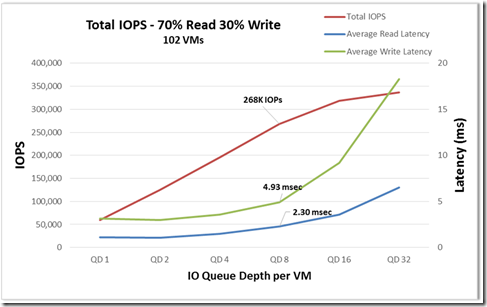

As for performance, Microsoft has calculated how many IOPS we can get from a CPS system.

Total IOPS, 100% read

Total IOPS, 70% read and 30% write

These tests were run on 14 different servers, targeting the storage.

Each Hyper-V node accesses the SMB-based JBOD storage over RDMA, which gives low-latency traffic. All storage traffic also uses ECMP to load-balance across the Force10 switches, where we have 2 x 10 GbE in LACP. There is also a flat layer 3 network between the switches, so there are no issues with STP, and with ECMP we have redundant links between all the hosts.
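For readers new to ECMP, the idea is that each flow is hashed onto one of several equal-cost paths, so a single flow stays in order while different flows spread across the links. The sketch below is only a conceptual illustration: real switches use their own hardware hash functions, and the switch names, addresses and ports here are hypothetical, not taken from the CPS configuration.

```python
import hashlib

def pick_next_hop(flow, next_hops):
    """Map a flow 5-tuple to one of several equal-cost next hops.

    SHA-256 is just a stand-in for the switch's hardware hash.
    """
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(next_hops)
    return next_hops[index]

# Hypothetical equal-cost paths and flows (src IP, dst IP, protocol, src port, dst port).
next_hops = ["switch-a", "switch-b"]
flows = [
    ("10.0.0.11", "10.0.1.21", 6, 49152, 445),
    ("10.0.0.12", "10.0.1.21", 6, 49153, 445),
    ("10.0.0.13", "10.0.1.22", 6, 49154, 445),
]

for flow in flows:
    print(flow, "->", pick_next_hop(flow, next_hops))
```

The same flow always lands on the same path, and if one path disappears the remaining entries in `next_hops` still carry the traffic.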

Storage Spaces configuration

3 x pools (2 x tenant, 1 x backup)

VM storage:

16x enclosure aware, 3-copy mirror @ ~9.5TB

Automatic tiered storage, write-back cache

Backup storage:

16x enclosure aware, dual parity @ 7.5TB

SSD for logs only

24TB of HDD and 3.2TB of SSD capacity left unused for automatic rebuild

Available space

Tenant: 156 terabytes

Backup: 126 terabytes (+ deduplication)

Note that the interleave on the Storage Spaces setup is set to 64 KB and the number of columns is 4 for the tenant pool.
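The storage numbers can be tied together with a little arithmetic. This is a sketch under a few assumptions: the 48 HDD / 12 SSD counts are taken to be per JBOD enclosure, all capacities are treated as decimal TB, and the 3-way mirror footprint is a simple "three copies" multiplication that ignores tiering and metadata overhead.

```python
# Usable capacity per pool, as quoted in the post.
TENANT_USABLE_TB = 156
BACKUP_USABLE_TB = 126
usable_tb = TENANT_USABLE_TB + BACKUP_USABLE_TB

# Raw capacity; drive counts assumed to be per enclosure.
JBODS = 4
HDD_PER_JBOD, HDD_GB = 48, 4000
SSD_PER_JBOD, SSD_GB = 12, 800

raw_hdd_tb = JBODS * HDD_PER_JBOD * HDD_GB / 1000
raw_ssd_tb = JBODS * SSD_PER_JBOD * SSD_GB / 1000

# A 3-way mirror keeps three copies of every block.
tenant_mirror_footprint_tb = TENANT_USABLE_TB * 3

print(f"Usable total:            {usable_tb} TB")
print(f"Raw HDD / SSD:           {raw_hdd_tb:.0f} TB / {raw_ssd_tb:.1f} TB")
print(f"Tenant mirror footprint: {tenant_mirror_footprint_tb} TB")
print(f"Usable vs raw HDD:       {usable_tb / raw_hdd_tb:.0%}")
```

The usable total lines up with the 282 TB figure in the rack specification.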

If you want to know how much this beauty costs, it is about $1,635,600. I haven't found that figure anywhere except in this price/performance whitepaper (http://www.valueprism.com/resources/resources/Resources/CPS%20Price%20Performance%20Whitepaper%20-%20FINAL.pdf), and note that it does NOT include Microsoft software at all.

An F5 VIPRION 2200 chassis is also included as a load balancer module, and it is also set up and integrated with System Center.

So these are some of the capabilities of the Cloud Platform System. If you order a rack of this, everything will be fully integrated, installed and configured before it arrives. Note that it does not give you a nice web portal like EVO:RACK does, but uses the familiar System Center tools and Hyper-V. Every component included is set up clustered and highly available on the shared storage. The only components not placed on the shared storage are the three AD domain controllers, which are set up locally on three different physical servers.
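To put that price in perspective, it can be spread over the quoted capacity of one rack. This is simple division using numbers from this post (2000 VMs, 282 TB usable storage); it covers hardware only and says nothing about software licensing or operating costs.

```python
# Hardware-only price of one rack, spread over its quoted capacity.
RACK_PRICE_USD = 1_635_600
VMS_PER_RACK = 2000
USABLE_STORAGE_TB = 282

cost_per_vm = RACK_PRICE_USD / VMS_PER_RACK
cost_per_usable_tb = RACK_PRICE_USD / USABLE_STORAGE_TB

print(f"Hardware cost per VM:        ${cost_per_vm:,.2f}")
print(f"Hardware cost per usable TB: ${cost_per_usable_tb:,.2f}")
```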

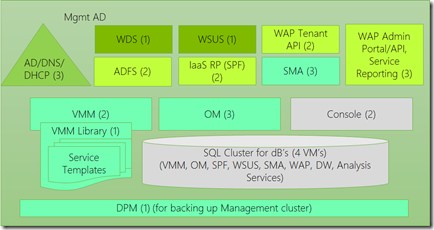

So within the first rack we have the management cluster, which consists of 6 nodes and includes services like System Center, SQL and so on. (These nodes are not needed in racks 2 to 4.)

So it is going to be really interesting to see what the response is to this type of pre-configured converged infrastructure (I know VCE has been on the market for some time, which makes it extra interesting), and also how this platform is going to evolve over time, with the new vNext capabilities and with Dell having a major launch cycle with the FX series and so on.

Hello,

Have you encountered the configuration for the F5 VIPRION? Especially the part about terminating IPsec tunnels and connecting them to internal HVN networks through NVGRE routing domains in the F5?

Hi Magnus,

No, I haven’t, but I know some people who have. Is it still an issue?

Yes, unfortunately it is still an issue. We have configured SCVMM and we can get traffic flowing from within the HVN to the Internet, and traffic to services (web etc.) on servers inside, but we are still stuck with the IPsec config.